“… I found things that even more people believe, such as that we have some knowledge of how to educate. There are big schools of reading methods and mathematics methods, and so forth, but if you notice, you’ll see the reading scores keep going down–or hardly going up–in spite of the fact that we continually use these same people to improve the methods. …

I think the educational and psychological studies I mentioned are examples of what I would like to call cargo cult science. In the South Seas there is a cargo cult of people. During the war they saw airplanes with lots of good materials, and they want the same thing to happen now. So they’ve arranged to make things like runways, to put fires along the sides of the runways, to make a wooden hut for a man to sit in, with two wooden pieces on his head like headphones and bars of bamboo sticking out like antennas–he’s the controller–and they wait for the airplanes to land. They’re doing everything right. The form is perfect. It looks exactly the way it looked before. But it doesn’t work. No airplanes land. So I call these things cargo cult science, because they follow all the apparent precepts and forms of scientific investigation, but they’re missing something essential, because the planes don’t land.”

When I was an undergraduate, one of the best modules I took was ‘applications of psychology’, led by the then head of psychology, Professor Ian Howarth. One of Howarth’s observations, which has stuck with me ever since, was the nonsensicality of business advice which focused on the practices of the top performing companies. What does such analysis actually tell us? How do we know that the bottom performing companies aren’t doing the same thing? It might be the case that the most successful and the least successful companies do many of the same things – or that these factors are actually irrelevant to building a successful company, or simply necessary but not sufficient for success.
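
Howarth’s point can be illustrated with a toy simulation. The sketch below is purely hypothetical: it invents a ‘practice’ that 70% of companies adopt and that has no effect at all on performance, then looks only at who uses it. The adoption rate, the number of companies and the function names are assumptions made for illustration, not data from any real study.

```python
import random

def simulate_companies(n=10_000, adoption_rate=0.7, seed=0):
    """Simulate companies whose performance is unrelated to a 'practice'."""
    rng = random.Random(seed)
    companies = []
    for _ in range(n):
        uses_practice = rng.random() < adoption_rate  # hypothetical practice
        performance = rng.gauss(0, 1)                 # independent of the practice
        companies.append((performance, uses_practice))
    # Sort from worst to best performer.
    return sorted(companies, key=lambda c: c[0])

def adoption_share(group):
    """Fraction of a group of companies that use the practice."""
    return sum(uses for _, uses in group) / len(group)

companies = simulate_companies()
decile = len(companies) // 10
bottom, top = companies[:decile], companies[-decile:]
print(f"top decile using practice:    {adoption_share(top):.0%}")
print(f"bottom decile using practice: {adoption_share(bottom):.0%}")
```

Studying only the top decile would suggest that most successful companies follow the practice. Looking at the bottom decile as well shows much the same adoption rate, which is what we should expect when the practice is irrelevant.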

There’s the same problem with the drive for school improvement. Frequently, ‘top performing’ schools (or school systems in other countries) are picked out for praise and their ‘best practices’ shared with others. However, for comparisons between schools to be of any value in improving the service they provide for children, the judgements of school performance on which those comparisons rest must be valid and reliable.

Without valid and reliable judgements, school improvement advice devolves to the level of ‘cargo cult’ science. In the absence of a genuine understanding of what improves schools, we risk (ineffectively and pointlessly) merely emulating the appearance of success.

The consequences of high-stakes school performance judgements

Judgements of school quality can create a vicious circle of problems:

Firstly, an unfavourable judgement appears to affect parental choice of schools differentially. There’s some evidence to suggest that middle-class parents are more likely to select schools based on Ofsted judgements than working-class parents:

“Drawing on interviews with parents, qualitative studies provided evidence that middle-class parents are particularly anxious about securing a place for their child in a ‘good school’ and, as a result, are very active in the school choice process. In contrast working-class families are more concerned about finding a school where they feel at home, in order to avoid rejection and failure.”

Secondly, an unfavourable judgement by the regulator has significant consequences for recruitment of teachers and school leaders. It is difficult to recruit school leaders in schools which face challenging circumstances.

“Right now, good people are being turned off becoming headteachers because the element of risk involved in the job has increased significantly. We’re in a situation where the knee-jerk reaction is that if a school has problems, the answer is to get rid of the head. It’s the football manager mentality, whereas what schools need is stability, and what heads need is constructive support, not the adversarial system we’re in now where school inspections are hit-jobs.”

The high stakes created by Ofsted have led unions to warn that leading a challenging school is effectively a form of ‘career suicide’ for headteachers:

“Ofsted was wielding a “Sword of Damocles” over “any senior leaders foolish enough to think that they will be sufficient to undertake the tricky work of turning round schools with seriously entrenched problems,” Mary Bousted, general secretary of the Association of Teachers and Lecturers, told her union’s annual conference in Manchester.”

Poor judgements also exacerbate teacher recruitment problems. Indeed, Ofsted itself has noted that challenging schools face an even greater problem in recruitment – as a result of Ofsted’s own judgements:

‘Speaking as the report was published, Sir Michael added: “We also face a major challenge getting the best teachers into the right schools.

‘“Good and outstanding schools with the opportunity to cherry pick the best trainees may further exacerbate the stark differences in local and regional performance. The nation must avoid a polarised education system where good schools get better at the expense of weaker schools.”’

Ofsted ratings of schools are not worthless, but whilst they are treated as infallible judgements of school quality, I strongly suspect they are doing more harm than good. It won’t happen this side of an election – where talking tough on schools appears to be “the winning strategy” – but the way forward likely involves lowering the immediate stakes of school accountability in line with the validity of the judgements.

So how valid are Ofsted judgements of school performance?

Some validity issues with school performance measures

The key measure of a state school’s performance in England is that school’s Ofsted rating. However, there are serious questions about the validity of these judgements. One issue is the reliability of the judgements. If a measure is unreliable (i.e. a different inspection team would often reach a different verdict) then the measure is not a valid one. We know that the validity of judgements of teaching quality from observations is suspect for precisely this reason.

“a number of research studies have looked at the reliability of classroom observation ratings. For example, the recent Measures of Effective Teaching Project, funded by the Gates Foundation in the US, used five different observation protocols, all supported by a scientific development and validation process, with substantial training for observers, and a test they are required to pass. These are probably the gold standard in observation (see here and here for more details). The reported reliabilities of observation instruments used in the MET study range from 0.24 to 0.68.

“One way to understand these values is to estimate the percentage of judgements that would agree if two raters watch the same lesson. Using Ofsted’s categories, if a lesson is judged ‘Outstanding’ by one observer, the probability that a second observer would give a different judgement is between 51% and 78%.”
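
To get a feel for how a reliability figure turns into disagreement between observers, here is a rough simulation. It is a sketch under stated assumptions and not the method used in the quoted analysis: two observers’ scores are modelled as normally distributed with a correlation equal to the reliability, and the share of lessons falling into each of the four grades is invented for illustration. It should not be expected to reproduce the quoted percentages exactly.

```python
import random
from statistics import NormalDist

# Hypothetical cumulative shares of lessons at or below each grade boundary
# (Inadequate, Requires Improvement, Good); the remainder is 'Outstanding'.
CUMULATIVE_SHARES = (0.05, 0.35, 0.80)
CUTS = [NormalDist().inv_cdf(p) for p in CUMULATIVE_SHARES]

def grade(score):
    """Map a continuous score to a grade index 0..3 (3 = 'Outstanding')."""
    return sum(score > cut for cut in CUTS)

def disagreement_given_outstanding(reliability, n=50_000, seed=1):
    """P(second observer gives another grade | first observer says 'Outstanding')."""
    rng = random.Random(seed)
    shared = reliability ** 0.5        # weight on what both observers see
    noise = (1 - reliability) ** 0.5   # weight on each observer's own error
    outstanding = differed = 0
    for _ in range(n):
        lesson = rng.gauss(0, 1)
        first = shared * lesson + noise * rng.gauss(0, 1)
        second = shared * lesson + noise * rng.gauss(0, 1)
        if grade(first) == 3:
            outstanding += 1
            differed += grade(second) != 3
    return differed / outstanding

for reliability in (0.24, 0.68):   # range reported for the MET instruments
    share = disagreement_given_outstanding(reliability)
    print(f"reliability {reliability}: second observer differs {share:.0%} of the time")
```

Whatever grade boundaries are assumed, the pattern is the same: the lower the reliability, the more often a second observer would overturn an ‘Outstanding’.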

Ofsted are aware that such inconsistency may also undermine the validity of the judgements of schools reached by inspection teams, and so they have set up a pilot to examine the reliability of inspection outcomes.

‘Ofsted said: “The reliability of the short inspection methodology will be tested during the pilots by two HMIs independently testing the same school on the same day and comparing judgements.”

‘Ofsted said that inspectors would continue using data as a “starting point” in all inspections.

‘“However in reaching a final judgement, inspectors consider the information and context of a school, as shown by the full range of evidence gathered during an inspection, including evidence provided by a school.”’

Regardless of the outcome, this probably won’t reassure school leaders, in my opinion, for several reasons:

Lack of blinding protocols

One major reason why successful trial results might be rejected by Ofsted’s critics may be the lack of independence of the judgements made by inspection teams. At the moment, the proposal appears to be that two inspection teams would enter a school on the same day and form separate judgements of overall effectiveness (presumably alongside separate assessments of achievement, quality of teaching, behaviour, leadership). However, for any agreement between these grades to be convincing evidence of reliability, the ratings would have to be truly independent from one another.

One threat to these trials is the absence (at time of writing) of any ‘blinding’ protocols. In research the system used to ensure that observations or measurements are genuinely independent is called ‘blinding’.

‘Blinding’ is particularly used in medical research. For instance, where a new drug is being tested against a placebo, the participant in the trial doesn’t know whether they are receiving the real treatment or not. Indeed, in high quality studies this is taken further (as a ‘double-blind’ trial) and the doctor giving the treatment also doesn’t know whether the patient is receiving a real or placebo drug. The purpose of these protocols is to reduce the potential bias created by the expectations of the patient and the doctor, so that if a difference is found between the effectiveness of the real drug versus the placebo it can be confidently related to the effect of the drug rather than the reactivity of the patient or the (unconscious) bias of the doctor.

Anchoring effects in judgements of performance

In the absence of some form of blinding, agreement between two inspection teams won’t necessarily mean that the system of forming judgements about schools is reliable. It may simply mean that unconscious bias has distorted the two judgements so they fall into closer agreement.

One obvious source of bias is the anchoring effect that a review of prior data before the inspection would produce. There is a widespread belief that inspection judgements are too heavily influenced by the data inspectors see on a school before the team even sets foot in the building.

Whilst we might hope that inspection systems somehow compensate for this bias, the evidence appears to suggest that it is a significant problem.

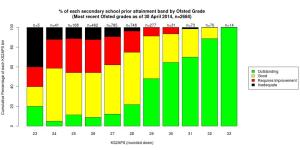

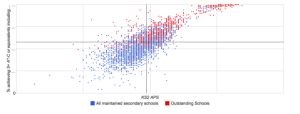

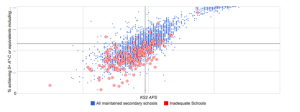

For example, Trevor Burton conducted an analysis of Ofsted judgements against prior attainment.

“So, it is easy. If you want your secondary school to get an Outstanding Ofsted grade and you want to avoid RI or Inadequate, make sure your pupils’ previous attainment on intake is as high as possible.

“Depressingly, this is our accountability system. This is why it is getting difficult to recruit good people to struggling schools. The odds are against them in more ways than the obvious ones.”

A more recent analysis by Kristian Still points to the same conclusion:

“All schools, regardless of their starting point, have a responsibility to do their utmost to secure the very best outcomes for each pupil in their school. However, to address the balance of the observations that have been made, is it not right that judgements of effectiveness (and indeed achievement) give appropriate consideration to the attainment profiles of every school.”

I contend that this strongly suggests that there is a powerful anchoring effect at play when inspection teams make judgements of schools. It appears that reviewing attainment data prior to inspection ‘anchors’ the judgement that inspection teams finally reach. Once anchored, impressions of the school’s effectiveness will likely gravitate towards that prior expectation (what is sometimes called the ‘halo or horns’ effect).

In the case of two teams inspecting on the same day to establish reliability, I suspect this anchoring effect might have even greater sway. Inspection teams concerned that their judgements would be at odds might tend to rely much more on the objective data about the school than their subjective judgement on the day. Such expectations about whether the quality of a school is likely to be ‘good’ or ‘requires improvement’ formed prior to inspection would be like telling the patient whether they have received the real drug or a placebo. Thus, even if the pilot is successful, it would not necessarily tell us the genuine reliability of inspection judgements.
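
A toy model shows why agreement between unblinded teams need not indicate reliability. In the sketch below, each team’s score is a weighted mix of the prior data both teams have seen and that team’s own impression on the day. The impressions are modelled as pure, independent noise, which is a deliberately pessimistic assumption, and the four equal-sized grades and the weights are invented for illustration.

```python
import random
from statistics import NormalDist

# Assumption: four equal-sized grade bands on a standardised score.
CUTS = [NormalDist().inv_cdf(p) for p in (0.25, 0.5, 0.75)]

def grade(score):
    """Map a standardised score to a grade index 0..3."""
    return sum(score > cut for cut in CUTS)

def agreement_rate(data_weight, n=50_000, seed=2):
    """Share of schools given the same grade by two teams who saw the same data."""
    rng = random.Random(seed)
    # Rescale so the combined score has unit variance for any weight.
    scale = (data_weight ** 2 + (1 - data_weight) ** 2) ** 0.5
    agree = 0
    for _ in range(n):
        prior_data = rng.gauss(0, 1)   # seen by both teams before the visit
        team_a = (data_weight * prior_data + (1 - data_weight) * rng.gauss(0, 1)) / scale
        team_b = (data_weight * prior_data + (1 - data_weight) * rng.gauss(0, 1)) / scale
        agree += grade(team_a) == grade(team_b)
    return agree / n

for weight in (0.0, 0.5, 0.8):
    print(f"weight on prior data {weight}: teams agree {agreement_rate(weight):.0%}")
```

With no weight on the prior data the teams agree only at chance level, because their impressions are unrelated. As the weight on the shared data rises, agreement rises with it, even though nothing about the on-the-day judgements has become more trustworthy.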

The problems of ‘progress measures’

Reliability is a necessary but not sufficient component of validity. In other words, a judgement can be consistently wrong. Thus, it is worth exploring whether the measures of progress used to make judgements of school effectiveness are in-and-of-themselves valid.

The fact that school performance judgements correlate strongly with the prior attainment of students suggests that attainment data (e.g. 5+ A*-C incl English and maths) may be acting as an anchor on inspection judgements. Will progress measures (e.g. 3 levels of progress across a key stage) provide a more valid basis from which to make judgements about schools?

There are reasons to think they may not:

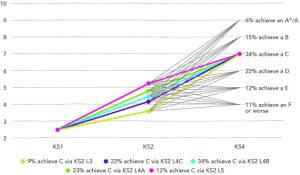

For instance, Henry Stewart suggests there’s reason to believe that the ‘progress’ students make across a key stage is highly related to their prior attainment. In other words, ‘high band’ students are much more likely to make 3 levels of progress than ‘low band’ students.

“Across England 81% of “high prior attainment” students (those on level 5 at age 11) make 3 levels of progress in Maths, but only 33% of “low prior attainment” students (those on level 3 or below at age 11) do so.”

Indeed, the 3 levels of progress measure appears to give a fairly easy ride for schools which can select or attract students with higher prior attainment scores:

“High expectations are a good thing. But this measure gives a low expectation for the level 5s while providing a very tough one for level 3s. The effect is that whether a school is deemed to be making sufficient progress with its students is much more likely to be defined by the intake than by the value it adds. Rather than balancing the absolute 40% measure, it reinforces any bias due to the low level of achievement at age 11.”
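
The arithmetic behind this can be made concrete. The sketch below takes the two national rates quoted above (81% for high prior attainers, 33% for low) and applies them to two hypothetical schools that perform exactly at the national rate for every pupil, differing only in intake. The intake mixes are invented, and the middle band is left out because its rate is not quoted here.

```python
# National shares of pupils making 3 levels of progress in maths, as quoted above.
NATIONAL_RATE = {"high": 0.81, "low": 0.33}

def headline_rate(intake):
    """Share of a cohort making 3 levels of progress, given its intake mix."""
    return sum(share * NATIONAL_RATE[band] for band, share in intake.items())

# Hypothetical intakes: both schools do exactly as well as the national
# average with each band of pupils.
school_a = {"high": 0.8, "low": 0.2}
school_b = {"high": 0.2, "low": 0.8}

print(f"School A: {headline_rate(school_a):.1%}")  # 71.4%
print(f"School B: {headline_rate(school_b):.1%}")  # 42.6%
```

Neither school adds more value than the other, yet their headline figures differ by almost 30 percentage points purely because of who walks through the door.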

This isn’t just a problem for schools which have low prior attaining cohorts. It’s interesting that the lack of ambition embedded in the 3 levels of progress (3LoP) measure – where KS2 students attaining a 5a are only expected to gain a grade B at GCSE in order to make ‘Expected Progress’ – is now being reported as a ‘failure’ of state schools rather than a distortion created by the accountability system:

“almost two thirds of high-attaining pupils (65%) leaving primary school, securing Level 5 in both English and mathematics, did not achieve an A* or A grade (a key predictor to success at A level and progression to university) in both these GCSE subjects in 2012 in non-selective secondary schools.”

In a couple of years, of course, all this will change with the movement to ‘Progress 8’ as a replacement for levels of progress.

However, some commentators are already pointing out that some aspects of this replacement system will still play to the same prior attainment biases:

“So let’s get this straight… “The progress 8 score improves equally, regardless of the grades they are moving between?”

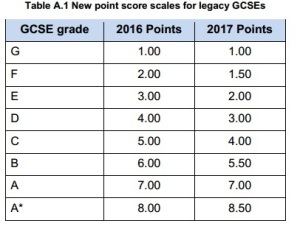

“In 2017, a student moving up a grade at the top of the grading scale, receives a point score increment three times of someone lower down the scale. Is that fair? …

“What this could mean is that a school teaching all of its students equally would be better served focusing on those more likely to attain the higher grades. i.e. by its design the progress 8 measure in 2017 could be encouraging prioritisation of the students with higher prior attainment over those with lower “ability”.”
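
The uneven increments can be checked with a few lines of code. The mapping below is assumed to be the 2017 point scores for legacy GCSE grades; the values are not given in the text above and should be verified against the official DfE guidance before being relied upon.

```python
# Assumed 2017 point scores for legacy GCSE grades (an assumption to verify).
POINTS_2017 = {"G": 1.0, "F": 1.5, "E": 2.0, "D": 3.0,
               "C": 4.0, "B": 5.5, "A": 7.0, "A*": 8.5}

def gain_per_grade(points):
    """Points gained by moving up one grade, for each adjacent pair of grades."""
    grades = list(points)  # dicts keep insertion order: lowest grade first
    return {f"{low}->{high}": points[high] - points[low]
            for low, high in zip(grades, grades[1:])}

gains = gain_per_grade(POINTS_2017)
for step, gain in gains.items():
    print(f"{step}: +{gain}")

# Ratio of the top-of-scale gain to the bottom-of-scale gain.
print(gains["A->A*"] / gains["G->F"])  # 3.0
```

On these assumed values, moving a student from A to A* is worth three times as much as moving a student from G to F, which matches the ratio described in the quotation.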

There are lies, damn lies, statistics … and then school data?

Jack Marwood has identified a number of fundamental problems with the way that school data is analysed and reported, and he picks out RAISEonline for particular criticism. He makes the point that it isn’t clear what RAISEonline is actually testing:

“If you want to test whether the test results for a given school is statistically significant when compared to a national mean and standard deviation, as RAISEonline does, you are effectively testing a ‘school effect’. Is there something about this school which makes it different to a control sample, in RAISEonline’s case all the children contributing results for a given school year?

“So what does make the school different? Is it the quality of the teaching and learning, as RAISEonline implicitly assumes? Is it a particular cohort’s teaching and learning? Is it the socio-economic background of the children? Is it their prior attainment? Is it their family income? Or is it a combination of these factors?”

He also points out some fairly fundamental issues with the assumptions underlying the RAISEonline analysis of school performance. Many of these statistical assumptions simply do not match the reality of the school system. For example:

- Children are not randomly allocated to schools.

- Children attending a school are likely to be similar to each other, making their data related.

- The piecemeal development of Keystages and sublevels means that the data is compromised before any analysis begins.

- Age differences between summer and winter born children are ignored.

- Creating average point scores (APS) renders analysis meaningless.

- The term ‘significant’ is used in a way contrary to its definition by statisticians.
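
The second point in the list, that children in the same school resemble each other, is enough on its own to break a naive significance test. The simulation below is a sketch of that general statistical problem and makes no claim to reproduce RAISEonline’s actual calculations. Every simulated school is identical in teaching quality; cohorts simply share a common component (standing in for intake or neighbourhood), and the size of that component is an invented parameter.

```python
import random

def flagged_share(cohort_sd, n_schools=2_000, pupils=30, seed=3):
    """Share of schools flagged 'significant' when no school differs in quality."""
    rng = random.Random(seed)
    pupil_sd = (1 - cohort_sd ** 2) ** 0.5  # keeps total pupil variance at 1
    standard_error = 1 / pupils ** 0.5      # valid only if pupils are independent
    flagged = 0
    for _ in range(n_schools):
        # Shared cohort component: intake, neighbourhood and so on, not teaching.
        shared = rng.gauss(0, cohort_sd)
        mean = sum(shared + rng.gauss(0, pupil_sd) for _ in range(pupils)) / pupils
        # Two-sided z-test against the national mean of 0 at the 5% level.
        flagged += abs(mean / standard_error) > 1.96
    return flagged / n_schools

print(f"independent pupils: {flagged_share(0.0):.0%} of schools flagged")
print(f"clustered pupils:   {flagged_share(0.3):.0%} of schools flagged")
```

When pupils really are independent, about one school in twenty is flagged, as the 5% threshold intends. Once pupils within a school share even a modest common component, far more schools are flagged as ‘significantly’ above or below average, although none of them is teaching any differently.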

He summarises:

“You are stuffed from the outset, as RUBBISHonline is likely to show up all kinds of warnings because it’s using the wrong, and incorrectly applied, tests of significance which don’t even stand up to elementary statistical scrutiny.”

Non-linear progress

Of course, most school measures presume the validity of the concept of linear progress across a keystage. Many schools now talk about trajectories when discussing student progress – embodying the assumption that it is reasonable to expect children to make such linear progress.

However, according to a recent report, “Measuring pupil progress involves more than taking a straight line”, this assumption may not have an evidence base to support it:

“We have an accountability system that has encouraged schools to check that children are making a certain number of sub-levels of progress each year. This is the basis on which headteachers monitor (and now pay) teachers and on which Ofsted judges schools. Yet there is little hard science underpinning the linear progress system in use.”

It turns out that there may be numerous pathways by which children reach their attainment at 16 and that almost all children – at some stage across their school life – will be deemed as underachieving.

This volatility in outcomes is especially high for children with low attainment at key stage 1 and implies that the tracking systems used (by schools and Ofsted) to identify students as ‘on target’ or ‘off track’ are based on invalid assumptions about how children progress through school. These labels have a large impact on what happens to those students, their teachers and ultimately their schools.

“The vast majority of pupils do not make linear progress between each Key Stage, let alone across all Key Stages. This means that identifying pupils as “on track” or “off target” based on assumptions of linear progress over multiple years is likely to be wrong.

“This is important because the way we track pupils and set targets for them:

- influences teaching and learning practice in the classroom;

- affects the curriculum that pupils are exposed to;

- contributes to headteacher judgements of teacher performance;

- is used to judge whether schools are performing well or not.”
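
A small simulation illustrates how easily a straight-line trajectory mislabels pupils. It is a sketch built on invented assumptions, not the method of the report quoted above: every simulated pupil gains, on average, exactly the expected amount between checkpoints, with random variation from one checkpoint to the next. The number of checkpoints and the size of the variation are hypothetical.

```python
import random

def ever_off_track(checkpoints=6, expected_gain=1.0, noise_sd=1.0,
                   n=20_000, seed=4):
    """Share of pupils who fall below the straight-line trajectory at least once."""
    rng = random.Random(seed)
    flagged = 0
    for _ in range(n):
        attainment = 0.0
        for step in range(1, checkpoints + 1):
            # Each pupil's average gain equals the expected gain exactly.
            attainment += rng.gauss(expected_gain, noise_sd)
            if attainment < step * expected_gain:  # below the straight line
                flagged += 1
                break
    return flagged / n

print(f"{ever_off_track():.0%} of pupils are 'off track' at some checkpoint")
```

Even though no pupil in this model is making less than expected progress on average, a large majority are labelled ‘off track’ at some point, simply because real progress wobbles around the line.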

Is Ofsted inadvertently driving a ‘cargo cult’ of school improvement?

It is difficult to understand why anyone would defend the high-stakes performance measures currently applied to schools: many of the statistical assumptions are flawed, children do not make ‘linear’ progress, the progress measures are distorted to favour high prior attainment, and attainment measures likely ‘anchor’ inspector judgements. Among the damaging consequences of a system which applies high-stakes accountability using performance measures which lack validity are the excessive workload it creates for teachers, the difficulties it compounds for teacher recruitment and retention, and the problems it produces in attracting school leaders to challenging schools.

Low validity in Ofsted judgements would imply that there are schools rated RI or even Inadequate which have received those judgements by virtue of the volatile progress of their students – but are perhaps doing some very worthwhile things to help those students get the most out of their education. On the other hand, there are likely some schools rated Outstanding which are actually coasting along relatively ineffectually, but protected by the fact that they attract or select higher attaining pupils.

Perhaps one of the most damaging consequences of a high-stakes/low-validity accountability system is that we continually fail to learn very much about what makes a school effective. Instead, it encourages a ‘cargo cult’ approach to school improvement:

- Gimmicks and pet projects from ‘outstanding’ schools or teachers – which may have nothing to do with improved outcomes for students – are copied across schools as ‘best practice’.

- Teachers and school leaders engage in time-consuming activities – which may have nothing to do with improved outcomes for students – in the hope that Ofsted lands them a good judgement.

Where the system we use to make judgements of schools possesses so many problems, how many of the ‘failures’ of state schools are ‘iatrogenic’? As David Didau says in the report above:

“Measurement has become a proxy for learning. If we really value students’ learning we should have a clear map of what they need to know, plot multiple pathways through the curriculum, and then support their progress no matter how long or circuitous. Although this is unlikely to produce easily comprehensible data, it will at least be honest. Assigning numerical values to things we barely understand is inherently dishonest and will always be misunderstood, misapplied and mistaken.”

Brilliant blog. I am just chuckling at the thought of judgements having to be made ‘blind’ just through the school visit and seeing what quality of teaching judgements that leads to. It is all so silly.

Wouldn’t it be interesting to see what judgements would be reached in the absence of any review of data on attainment or progress? It’s likely impossible in practice – thus anchoring effects probably act as a fundamental limitation in the validity of judgements of school effectiveness.

Although I can see that progress measures clearly don’t currently make for equitable judgments about schools with contrasting intakes… isn’t it because of anchoring judgments that we’re able to move away from a system of trying to judge quality of teaching based on lesson grades?

Graded lesson observations lacked reliability (and therefore validity) – therefore it’s right that they have gone. Ofsted claim that data is only one factor in the judgement forming process when grading school performance. The fact that it appears to strongly anchor those judgements is therefore disturbing – especially as even progress measures strongly favour schools which select or attract higher prior attainment pupils!

Thank you – I do agree with you. I am intrigued where this could all be headed. If inspectors can’t make reliable judgments about teaching and learning based on progress statistics, but also can’t measure it in individual lessons, what CAN they make any reliable judgment from? Would the idea be to quantify a general impression about teaching quality from seeing multiple lessons, and then this would be cross-referenced against the impressions of the alternative inspection group seeing different lessons? … And then whatever progress data there is can be factored into the ‘impression’ afterwards? Can we ever be more objective than that?

I think the answer isn’t that Ofsted judgements could ever be 100% accurate or reliable, but that the ‘stakes’ of an inspection judgement have to be moderated in line with the limitations of the validity of the measurement. In other words, a movement towards a focused and formative assessment of where a school’s strengths and areas for development are – and a source of external feedback on the progress school teams are making on developing those areas – rather than a ‘Sword of Damocles’, summative judgement.

…Yes! Of course I guess that was your point all along 😀 Thank you for the post and the discussion.

This is so interesting. My children’s school went from supposedly ‘outstanding’ to ‘inadequate’ and is currently in special measures. This seems to have been largely based on the progress made by a few lower prior attainment students. The school had stuck with GCSEs rather than BTECs and this had lowered the percentage getting 5 A-C inc English and Maths. I have not met a single parent or student that believes the grading is accurate. Perhaps once people have personal experience they realise just how flawed the current system is.

Good points. How do we extract our teachers and our school leaders from the thrall of misguided statistics and ‘best practice’, though? It wasn’t so long ago that I had to make a strong argument in my ‘appraisal’ against the use of pupil points progress as a measure of my effectiveness as a teacher. In fact, it was of no consequence to me on UPS3 and although they accepted my argument, they proceeded to use this to judge eligibility for performance-related pay for other members of staff!

I recommend you read around before rushing to judgement about Progress 8 in the section “The problems of ‘progress measures’”. It’s nothing sinister, just a one-year adjustment between one points system and another. Read the comments from the originator of the method: https://principalprivate.wordpress.com/2015/03/08/what-i-am-learning-about-their-determination-to-perpetuate-unfairness-in-accountability-systems/comment-page-1/#comment-189

Good to know – will take a look!
